Ontosight® – Biweekly Newsletter, June 17th – June 30th, 2024

Innoplexus wins Horizon Interactive Gold Award for Curia App

D3 ENGINE

Collaborative Approach

The Innoplexus team works together with its customers to ensure optimal integration with customer processes. Before starting any new project, a scoping workshop is held with the customer to outline their major requirements and define the exact project deliverables. As a next step, a proposal document covering the project background and context, approach, deliverables, project governance, timeline and cost is submitted for customer review and approval. Once the customer accepts the proposal, the parties sign a Master Service Agreement (MSA) and/or a Statement of Work (SoW). As soon as Innoplexus receives the Project Order (PO), the project kick-off and project delivery are planned and executed as agreed.

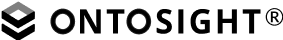

Iterative Way of Working

Innoplexus teams follow the agile methodology. As a first step, a minimum viable product is delivered. It is then built up incrementally, incorporating advanced requirements and customer feedback at regular intervals during milestone and project delivery meetings, so that the solution improves with each iteration.

Frequent Touchpoints

Innoplexus and customer teams connect regularly to stay aligned on goals and to monitor project progress. Regular projects (4-6 weeks in duration) have bi-weekly meetings; bigger, more complex projects (26-30 weeks in duration) additionally have monthly steering committee meetings.

Hypothesis generation is an important step in the scientific method and is commonly used in data analysis and research. In the context of quality assurance, hypothesis generation involves formulating hypotheses about potential problems or issues that may be affecting the quality of a product or process. These hypotheses can be based on previous experience, data analysis, or other sources of information.

The process of hypothesis generation typically involves the following steps:

Intuitive interfaces are critical for ensuring that the software or product being developed is easy for you to use and meets the needs of end users. The Quality Assurance team is responsible for testing the user interface and ensuring that it meets the organization’s standards for usability and accessibility. Our automated testing process covers 90% of our products to catch errors proactively.

To provide you with good-quality data and products, the QA team performs Manual Testing to confirm that functionality works properly, API Testing to validate each API and its data, Application Security Testing to protect data security and privacy, and Performance Testing to ensure the application scales at peak usage. We are always open to your feedback on usability and acceptance testing. By focusing on intuitive interfaces, the QA team helps ensure that the software or product being developed is user-friendly, accessible, scalable, secure, and meets your needs. Our vision is to increase user satisfaction, improve user adoption, and ultimately increase business success.
